In the early days of generative AI, the workflow was simple: prompt → result. You asked a chatbot to write a Python script or draft an email, and you accepted the output.

But as AI integration deepens into complex fields—software engineering, legal analysis, and creative writing—that single-track workflow is revealing its cracks. We are learning that the most effective way to use artificial intelligence is not to treat it as a solitary genius, but as a collaborative team.

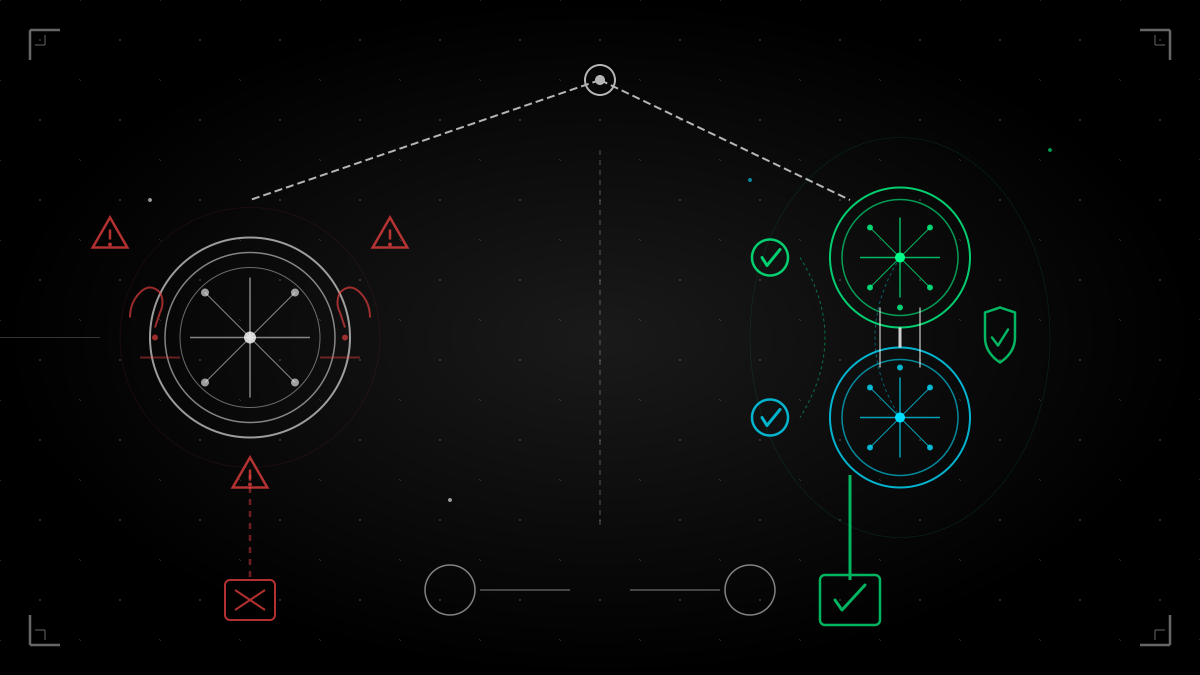

Handling tasks with two or more AI models, specifically by separating the generator from the reviewer, is becoming a common way to improve accuracy. Here is why the “two-brain” approach is so effective.

1. Breaking the “sunk cost” of context

One of the subtler weaknesses of large language models (LLMs) is how they predict text. An LLM generates content token by token, effectively “reading” what it has just written to decide what to write next.

If a model makes a small logic error early in a response, it tends to build on that error rather than correct it, because the error is now part of the context it conditions on. In effect, the model is biased by its own previous output.
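To make the feedback loop concrete, here is a toy sketch. The "models" (`toy_counter`, `slipping_counter`) are hypothetical stand-ins that count upward from the last token they see. Real LLMs sample from a learned probability distribution over tokens. The loop structure is the same, though: each new token is appended to the context and fed back in.

```python
from typing import Callable, List

# Hypothetical interface: a "model" maps the context so far to the next token.
NextTokenFn = Callable[[List[str]], str]


def generate(model: NextTokenFn, prompt: List[str], max_tokens: int) -> List[str]:
    """Greedy autoregressive loop: each new token is appended to the context
    and fed back in, so earlier output (right or wrong) shapes later output."""
    context = list(prompt)
    for _ in range(max_tokens):
        token = model(context)
        if token == "<eos>":
            break
        context.append(token)
    return context[len(prompt):]


def toy_counter(context: List[str]) -> str:
    """Hypothetical toy model: keep counting up to 5 from the last token."""
    last = int(context[-1])
    return "<eos>" if last >= 5 else str(last + 1)


def slipping_counter(context: List[str]) -> str:
    """Same toy model, but it slips on its first token (given a one-token prompt)."""
    if len(context) == 1:
        return str(int(context[-1]) + 2)  # the early "logic error"
    return toy_counter(context)


print(generate(toy_counter, ["1"], 10))       # ['2', '3', '4', '5']
print(generate(slipping_counter, ["1"], 10))  # ['3', '4', '5']
```

The slip is never revisited. Every later token is computed from a context that already contains `3`, so the error is carried forward instead of corrected.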

Why a second model helps:

When you hand that code or text to a second AI model, you break that chain. The second model has no “sunk cost” in the generation process. It sees the output as a static block of text, freeing it to spot logic gaps that the first model was too “close” to see. It provides a genuinely fresh set of “eyes.”
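In chat-style APIs, "breaking the chain" simply means the reviewer starts from a new, short message list. This sketch assumes the role/content message-dictionary shape that several chat APIs use. The helper names (`self_review_messages`, `fresh_review_messages`) are hypothetical, and no real API is called.

```python
from typing import Dict, List

Message = Dict[str, str]  # assumed {"role": ..., "content": ...} shape


def self_review_messages(history: List[Message], request: str) -> List[Message]:
    """Self-review: the request is appended to the generator's own conversation,
    so the model re-reads all of its earlier turns before judging the output."""
    return history + [{"role": "user", "content": request}]


def fresh_review_messages(artifact: str, request: str) -> List[Message]:
    """Fresh review (hypothetical helper): a new conversation holding only the
    finished artifact, with none of the generator's earlier turns."""
    return [
        {"role": "system", "content": "You are a critical reviewer."},
        {"role": "user", "content": f"{request}\n\n{artifact}"},
    ]


history = [
    {"role": "user", "content": "Write a function that averages a list."},
    {"role": "assistant", "content": "def avg(xs): return sum(xs) / len(xs)"},
]
code = history[-1]["content"]
request = "Find edge cases where this code might crash."

print(len(self_review_messages(history, request)))  # 3
print(len(fresh_review_messages(code, request)))    # 2
```

Only the second list is free of the generator's earlier turns. Sending it to a *different* model combines this idea with the next one.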

2. The ensemble effect (cognitive diversity)

In data science, there is a technique called “ensemble learning,” in which several models are combined to predict an outcome. If Model A and Model B are each 90% accurate, combining them can push accuracy above 90%, provided they make different kinds of mistakes.
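A quick worked calculation shows where the gain comes from. This sketch makes two assumptions. First, the models' errors are fully independent, which is the best case. Second, as an illustration, it adds more 90%-accurate models so that a simple majority vote is possible. The function name `majority_vote_accuracy` is hypothetical.

```python
from math import comb


def majority_vote_accuracy(p: float, n: int) -> float:
    """Probability that a strict majority of n independent models, each correct
    with probability p, is correct (use odd n to avoid ties). Assumes independence."""
    need = n // 2 + 1
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(need, n + 1))


# Assumption: a reviewer that independently misses 10% of errors lets through
# only 0.1 * 0.1 = 1% of the generator's errors.
print(round(0.1 * 0.1, 4))                        # 0.01
print(round(majority_vote_accuracy(0.9, 3), 4))   # 0.972
print(round(majority_vote_accuracy(0.9, 5), 4))   # 0.9914
```

Treat these numbers as a ceiling. Real models share training data and blind spots, so their errors are correlated and the actual gain is smaller.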

Different AI models (e.g., GPT-4o, Claude 3.5 Sonnet, Llama 3) differ in training data, fine-tuning, and safety alignment, and often in architectural details as well. In practice, they tend to “think” (and fail) differently.

- Model A might be prone to verbosity but excellent at logic.

- Model B might be concise but prone to skipping edge cases.

When Model B reviews Model A’s work, it is unlikely to share the exact same blind spots. The probability of two distinct models hallucinating the exact same incorrect fact is significantly lower than one model doing it alone.
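The diversity argument depends on how independent the errors are. The simulation below is a deliberately crude model, not a measurement of any real system. Each of two models is wrong 10% of the time, and a hypothetical `overlap` parameter controls how often model B shares model A's blind spot. The simulation counts how often both are wrong on the same item, which is an upper bound on both giving the *same* wrong answer.

```python
import random


def shared_miss_rate(error_rate: float, overlap: float,
                     trials: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of how often two models are both wrong on one item.

    overlap is a hypothetical knob: the probability that model B shares model
    A's blind spot on an item (it inherits A's outcome). Otherwise B errs
    independently. B's own error rate equals error_rate either way.
    """
    rng = random.Random(seed)
    both_wrong = 0
    for _ in range(trials):
        a_wrong = rng.random() < error_rate
        if rng.random() < overlap:
            b_wrong = a_wrong  # shared blind spot
        else:
            b_wrong = rng.random() < error_rate  # independent mistake
        if a_wrong and b_wrong:
            both_wrong += 1
    return both_wrong / trials


for overlap in (0.0, 0.5, 1.0):
    # Analytic value: error_rate * (overlap + (1 - overlap) * error_rate)
    print(overlap, round(shared_miss_rate(0.1, overlap), 3))
```

With no overlap, the two models fail together about 1% of the time. With full overlap, the second model adds nothing and the shared failure rate stays near 10%. This is the intuition behind pairing genuinely different models.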

3. Specialization: the “artist” and the “editor”

Human teams rarely have the same person write the code, test the code, and manage the project. We specialize. Multi-model workflows allow you to treat AIs as specialists rather than generalists.

A coding example

Imagine you are building a complex React component.

The Generator (e.g., Claude 3.5 Sonnet): Known for strong coding capabilities and verbose, helpful explanations. You ask it to write the code.

The Reviewer (e.g., GPT-4o or o1): Known for strong logical reasoning and adherence to instructions. You feed it the code from the generator and ask: “Find three security vulnerabilities or edge cases where this code might crash.”
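Wired together in code, the pattern is just two calls. This sketch deliberately leaves the model calls abstract. `ModelFn`, `generate_then_review`, and the stub models are hypothetical names. In a real project, each callable would wrap whichever vendor SDK you use for the generator and the reviewer.

```python
from typing import Callable, Dict

# Hypothetical interface: a model is any function that takes a prompt and
# returns text. In practice each one would wrap a different vendor's SDK.
ModelFn = Callable[[str], str]

REVIEW_PROMPT = (
    "Find three security vulnerabilities or edge cases where this code "
    "might crash.\n\n{code}"
)


def generate_then_review(task: str, generator: ModelFn,
                         reviewer: ModelFn) -> Dict[str, str]:
    """Run a generator/reviewer pair; the reviewer sees only the finished code."""
    code = generator(task)
    critique = reviewer(REVIEW_PROMPT.format(code=code))
    return {"code": code, "critique": critique}


# Stub models (hypothetical) so the sketch runs without network access.
def fake_generator(prompt: str) -> str:
    return "function Avg({ xs }) { return <p>{xs.reduce((a, b) => a + b) / xs.length}</p>; }"


def fake_reviewer(prompt: str) -> str:
    return "1. Crashes when xs is empty: reduce() has no initial value."


result = generate_then_review("Write a React component that averages xs.",
                              fake_generator, fake_reviewer)
print(result["critique"])
```

Keeping the models as plain callables makes it easy to swap which model plays which role. It also lets you test the plumbing with stubs.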

If you ask the same model to review its own code immediately after writing it, it will often gloss over errors because it “thinks” the code is correct (after all, it just wrote it). A second model has no such ego.

4. The “adversarial” prompting method

This is perhaps the most powerful use case. You can set up two models to argue against each other, with one attacking the other’s work, so that weak points surface before you rely on the result. This is often called “red teaming.”

The workflow:

- Model A generates a strategic plan or a legal argument.

- Model B is prompted to be a “ruthless critic” or “opposing counsel.” Its only job is to tear the argument apart.

- Model A (or a third Model C) then rewrites the plan addressing those specific criticisms.
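The three steps map onto a short loop. In this sketch, `red_team`, its `rounds` parameter, and the prompt wording are illustrative assumptions. `author`, `critic`, and the optional `reviser` (the "Model C" above) are any prompt-in, text-out callables you supply.

```python
from typing import Callable, List, Optional, Tuple

ModelFn = Callable[[str], str]  # hypothetical: prompt in, text out


def red_team(task: str, author: ModelFn, critic: ModelFn, rounds: int = 2,
             reviser: Optional[ModelFn] = None) -> Tuple[str, List[str]]:
    """Draft, attack, revise (hypothetical helper).

    Returns the final draft and every critique.
    """
    rewrite = reviser or author  # Model C if given, otherwise Model A
    draft = author(task)
    critiques: List[str] = []
    for _ in range(rounds):
        critique = critic(
            "You are opposing counsel. Your only job is to tear this argument "
            f"apart. List its weakest points.\n\n{draft}"
        )
        critiques.append(critique)
        draft = rewrite(
            f"Task: {task}\n\nPrevious draft:\n{draft}\n\n"
            f"Rewrite it to address these specific criticisms:\n{critique}"
        )
    return draft, critiques
```

The fixed `rounds` cap is a design choice that bounds cost. Returning the critiques lets you check what the final draft was asked to fix.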

This dialectic process pushes the AI beyond the most probable (average) answer and toward a more robust, defensible solution.

Summary: How to implement this today

You don’t need complex software pipelines to use this technique. You can do it manually in your browser tabs.

- Draft your content or code in your primary LLM.

- Copy the output.

- Paste it into a different LLM with the prompt: “Review this for [clarity/bugs/logic]. Be highly critical and list specific improvements.”
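If you repeat the last step often, a tiny helper saves retyping the prompt. This is an optional convenience, not part of the workflow itself. `build_review_prompt` and the `FOCUS_AREAS` tuple are hypothetical names, and the prompt text is the one quoted in the last step.

```python
from typing import Sequence

# Hypothetical: the three focus areas from the prompt; extend as needed.
FOCUS_AREAS = ("clarity", "bugs", "logic")


def build_review_prompt(output: str, focus: Sequence[str]) -> str:
    """Fill in the review prompt for pasting into a second LLM."""
    unknown = [f for f in focus if f not in FOCUS_AREAS]
    if not focus or unknown:
        raise ValueError(f"focus must be a non-empty subset of {FOCUS_AREAS}")
    return (
        f"Review this for {'/'.join(focus)}. Be highly critical and "
        f"list specific improvements.\n\n{output}"
    )


print(build_review_prompt("def avg(xs): return sum(xs) / len(xs)", ["bugs", "logic"]))
```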

By introducing a second “brain” into the loop, you move from artificial intelligence to artificial collaboration. This can substantially reduce the hallucinations that slip through and make your work more resilient.

Hi, I’m Owen! I am your friendly Aussie for everything related to web development and artificial intelligence.